Zhenqi He

I am a first-year PhD student at the Hong Kong University of Science and Technology (HKUST), supervised by Prof. Long Chen. Before that, I obtained my MSc and BSc degrees from the University of Hong Kong, where I was advised by Prof. Kai Han in the Visual AI Lab. I was also fortunate to intern at the Hong Kong Observatory.

Email / Google Scholar / X / ResearchGate

Preprint

Category Discovery: An Open-World Perspective

Zhenqi He, Yuanpei Liu, Kai Han; Under Review [Paper]

We survey Category Discovery (CD), the task of grouping unlabeled data that may contain unseen classes with the help of limited labeled data. We propose a taxonomy spanning NCD, GCD, and real-world variants (continual, long-tailed, federated, etc.), review methods across three core components (representation learning, label assignment, and class-number estimation), and provide unified benchmarks. Our analysis shows benefits from large pretrained backbones, hierarchical or auxiliary signals, and curriculum-style training, and it surfaces open problems in robust label assignment, reliable class-number estimation, and scaling to complex multi-object scenarios.

Selected Publications

My research interests include open-world learning and multi-modal learning.

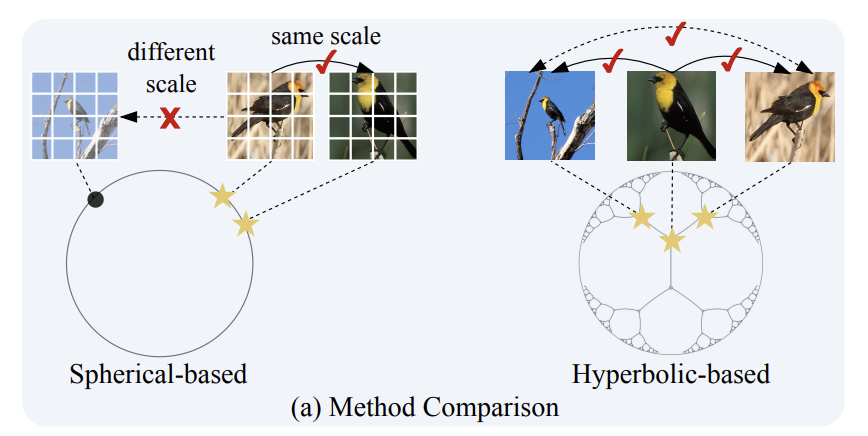

Hyperbolic Category Discovery

Yuanpei Liu*, Zhenqi He*, Kai Han (*: Equal Contribution); CVPR, 2025 [Paper] / [Code]

We introduce HypCD, a simple hyperbolic framework for learning hierarchy-aware representations and classifiers for Generalized Category Discovery. HypCD first maps the Euclidean embedding space of the backbone network into hyperbolic space, then performs representation and classification learning there, taking into account both the hyperbolic distance and the angle between samples.

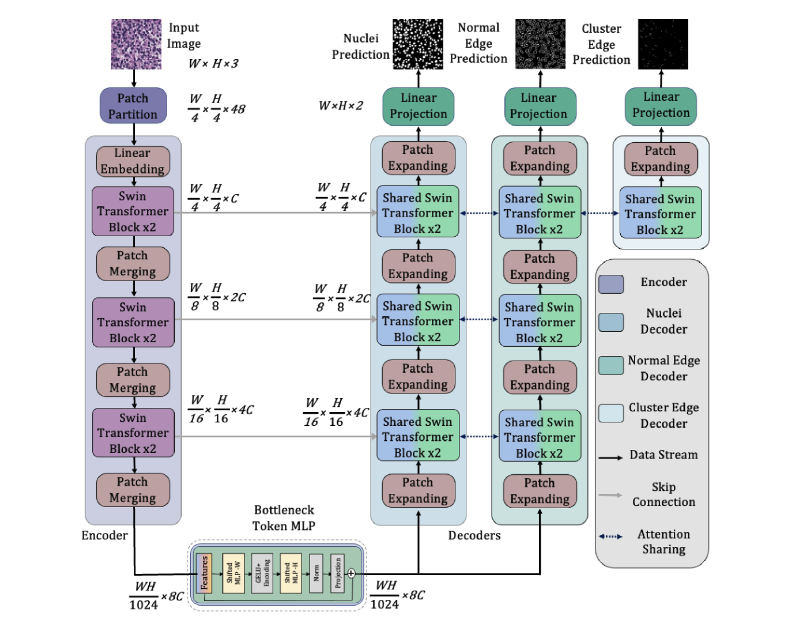

TransNuSeg: A Lightweight Multi-Task Transformer for Nuclei Segmentation

Zhenqi He, Mathias Unberath, Jing Ke, Yiqing Shen; MICCAI, 2023 [Paper] / [Code]

This paper proposes TransNuSeg, a lightweight multi-task framework for nuclei segmentation and the first attempt at an architecture driven entirely by Swin Transformers. To alleviate prediction inconsistency between branches, we propose a self-distillation loss that regularizes the consistency between the nuclei decoder and the normal edge decoder. In addition, an attention-sharing scheme that shares attention heads among all decoders leverages the high correlation between tasks.

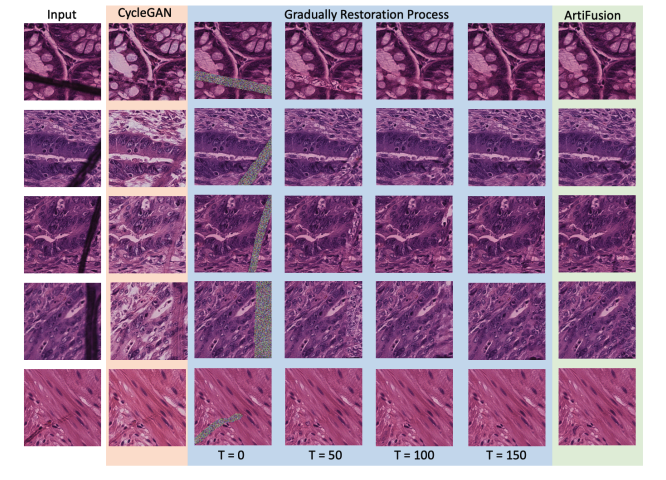

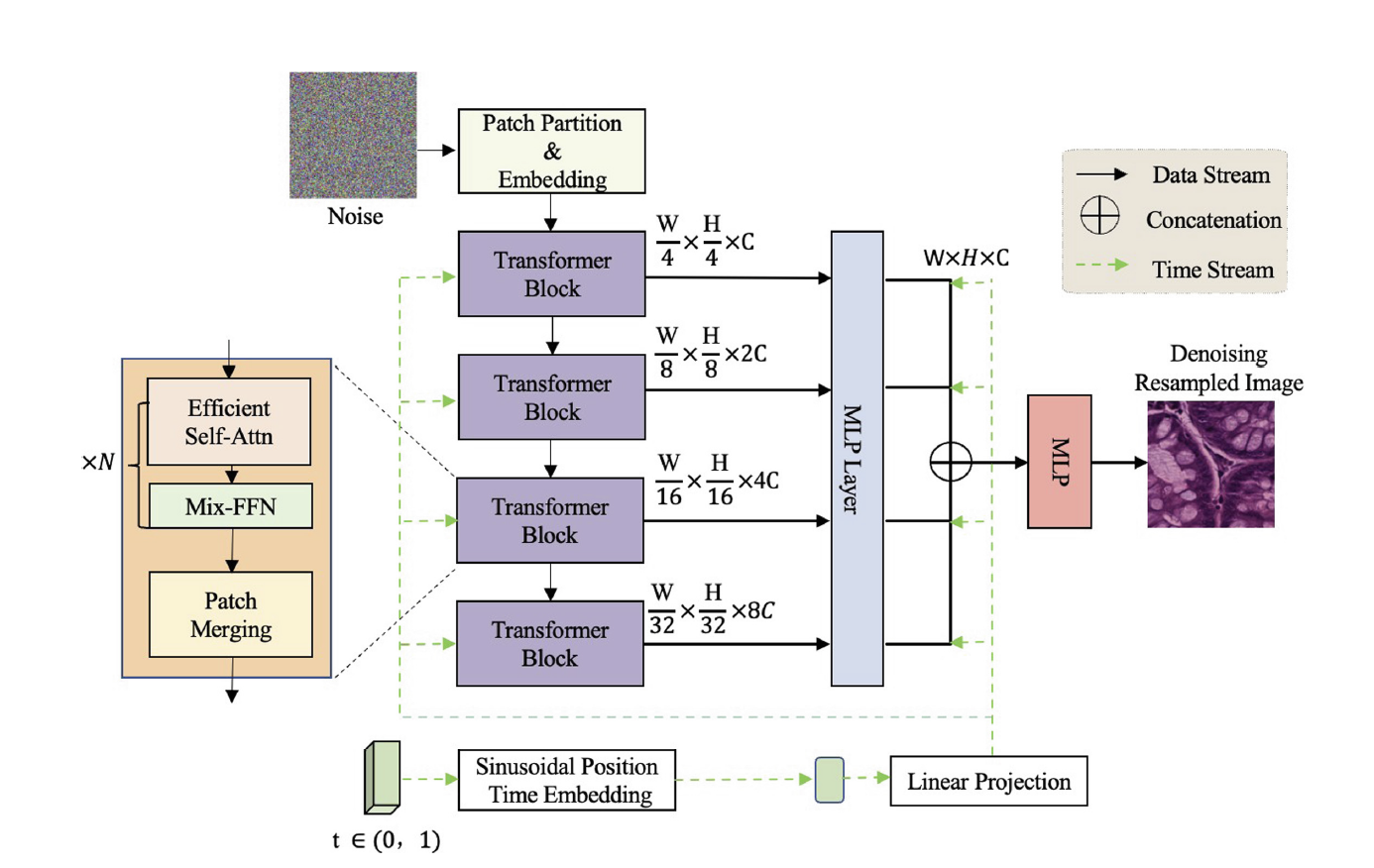

Artifact Restoration in Histology Images with Diffusion Probabilistic Models

Zhenqi He, Junjun He, Jin Ye, Yiqing Shen; MICCAI, 2023 [Paper] / [Code]

We present ArtiFusion, the first attempt at a denoising diffusion probabilistic model for histological artifact restoration. ArtiFusion formulates the restoration of artifact regions as a gradual denoising process, and its training relies solely on artifact-free images, which reduces training complexity. To capture local-global correlations during regional artifact restoration, we design a novel Swin-Transformer denoising architecture together with a time token scheme. Extensive evaluations demonstrate the effectiveness of ArtiFusion as a pre-processing method for histology analysis: it preserves the tissue structures and stain style of artifact-free regions during restoration.

Histology Image Artifact Restoration with Lightweight Transformer and Diffusion Model

Chong Wang, Zhenqi He, Junjun He, Jin Ye, Yiqing Shen; AIME, 2024 [Paper] / [Code]

In this paper, we propose a lightweight transformer-based framework for histological artifact restoration. Compared with existing solutions based on generative adversarial networks (GANs), our method minimizes changes in morphology while maximizing preservation of the stain style during artifact restoration. By restoring artifact-affected areas more reliably and accurately, our model facilitates better analysis and interpretation of histological images, potentially improving the accuracy of tumor diagnosis and treatment decisions.

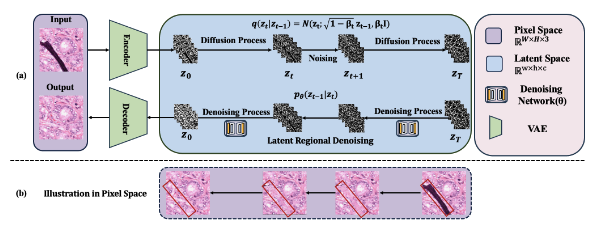

LatentArtiFusion: An Effective and Efficient Histological Artifacts Restoration Framework

Zhenqi He, Wenrui Liu, Minghao Yin, Kai Han; DGM4MICCAI, 2024 [Paper] / [Code]

In this paper, we propose LatentArtiFusion, a novel framework that leverages the latent diffusion model (LDM) to restore histological artifacts with high performance and computational efficiency. Unlike traditional pixel-level diffusion frameworks, LatentArtiFusion executes the restoration process in a lower-dimensional latent space, which significantly improves computational efficiency. In extensive experiments on real-world histology datasets, LatentArtiFusion runs more than 30× faster than state-of-the-art pixel-level diffusion frameworks.

Academic Service

Reviewer, MICCAI 2025

Reviewer, MICCAI 2024

Reviewer, DGM4MICCAI 2024

Education

Ph.D. in Computer Science and Engineering, HKUST, Clear Water Bay, 2025 - 2029. Advisor: Prof. Long Chen

MSc in Artificial Intelligence, The University of Hong Kong, Hong Kong, Sep. 2023 - Jan. 2025. Advisor: Prof. Kai Han. Grade: Distinction

BSc in Mathematics (Double Major in Computer Science), The University of Hong Kong, Hong Kong, Sep. 2018 - Jun. 2023

Experiences

Research Intern, Hong Kong Observatory, Hong Kong, Jan. 2022 - Jan. 2023

This page is built on source code.